Introduction

We transition from mathematical description to engineering by using optimization techniques to rationally design the most efficient system that meets our specifications. In our case, we are trying to design a strain of yeast that produces a significant amount of vitamins relative to consensus daily value levels while minimizing the amount of enzyme that the cell needs to produce. Because we are optimizing a highly nonlinear objective function over an arbitrary feasible region (parameter space), we investigated a series of nonlinear optimization algorithms with various properties.

In this discussion we consider optimizing the beta-carotene pathway. All analysis done for beta-carotene has been duplicated for l-ascorbate as well.

Objectives

We have used optimization techniques to answer a number of questions.

- What concentration of each enzyme should we attempt to attain in vivo in order to have optimal vitamin production?

- How much vitamin can we expect VitaYeast to produce under different constraints?

- What sort of resources will vitamin production demand from the cell?

- How will VitaYeast allocate its resources to produce vitamins optimally?

Multi-objective optimization

Motivation

We began by hypothesizing what sort of results we could expect from optimizing our pathway for maximum beta-carotene production. We quickly realized that we were in for a major problem: from the perspective of the optimization algorithm, adding more enzyme is always the right thing to do. Adding enzyme might increase the pathway's speed and efficiency, but would never cause it to slow down. Thus, any decent algorithm would converge on a solution that pushed the enzyme concentrations to their upper bounds. Running the optimization in this way would yield only a trivial result.

We know intuitively that as we demand increasing enzyme production from cells, we strain their resources until the cell viability and overall product output actually decrease. We do not have a quantitative model for this vague notion of "straining" our VitaYeast, so it cannot be incorporated directly into our simulation. We therefore need to express the "strain" constraint in a more flexible way than bounds and simple linear constraints allow. One solution is to maximize vitamin production while simultaneously minimizing "strain". Then, for a given level of strain, we know the optimal vitamin production level, and vice versa.

Pareto Front

Often optimization problems can be phrased as two competing objectives: get home quickly, but use the least gasoline; make the most widgets for the least amount of money. In some cases these problems condense down to a single objective function. For example, widgets can be sold for money, so the single objective function [number of widgets]*[revenue per widget] - [cost of widget production] can be used. When the objective functions cannot be condensed, Pareto frontiers provide a framework to think about the solution to such a problem. A solution is Pareto optimal when no objective can be improved without worsening at least one other objective. The concept comes from the field of economics, where the condition is phrased as "making any individual better off requires making someone else worse off". In most problems there exists a surface (the Pareto frontier) in the feasible region made up of the points for which Pareto optimality holds.
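As a minimal sketch of the dominance idea, the following Python filters a list of candidate solutions down to its Pareto frontier. The two-objective layout (a cost to minimize, an output to maximize), the function names, and the sample points are assumptions made for illustration; none of them come from our model.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (cost to minimize, output to maximize)


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    cost_a, out_a = a
    cost_b, out_b = b
    no_worse = cost_a <= cost_b and out_a >= out_b
    strictly_better = cost_a < cost_b or out_a > out_b
    return no_worse and strictly_better


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates, sorted by cost."""
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front)


# Hypothetical (cost, output) pairs, for illustration only.
candidates = [(1.0, 2.0), (2.0, 5.0), (3.0, 4.0), (4.0, 7.0), (4.0, 6.0)]
print(pareto_front(candidates))
```

In this example, (3.0, 4.0) is dropped because (2.0, 5.0) costs less and yields more, and (4.0, 6.0) is dropped because (4.0, 7.0) yields more at the same cost. The pairwise check is quadratic in the number of points, which is acceptable for populations of a few hundred.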

We are interested in solving the following multi-objective problem: how can we maximize the amount of vitamin produced while minimizing the extra nitrogen required to produce the enzymes? Nitrogen usage serves as a proxy for the general burden of making extra enzymes out of amino acids. To quantify it, we calculated the nitrogen content of each enzyme in our pathways.
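The sketch below shows one way such a nitrogen count could be made from an amino-acid sequence; it is not necessarily the calculation we performed. It relies only on the standard amino-acid structures: every residue in a chain contributes one backbone nitrogen, and six residues carry extra side-chain nitrogens. The function name and the example peptide are hypothetical, and cofactors and post-translational modifications are ignored.

```python
# Extra side-chain nitrogen atoms, keyed by one-letter residue code.
# Arg has 3, His has 2, and Lys, Asn, Gln, and Trp have 1 each.
EXTRA_SIDE_CHAIN_N = {"R": 3, "H": 2, "K": 1, "N": 1, "Q": 1, "W": 1}
STANDARD_RESIDUES = set("ACDEFGHIKLMNPQRSTVWY")


def nitrogen_atoms(sequence: str) -> int:
    """Count nitrogen atoms in a polypeptide given its one-letter sequence."""
    total = 0
    for residue in sequence.upper():
        if residue not in STANDARD_RESIDUES:
            raise ValueError(f"unknown residue: {residue!r}")
        # One backbone nitrogen per residue, plus any side-chain nitrogens.
        total += 1 + EXTRA_SIDE_CHAIN_N.get(residue, 0)
    return total


# Hypothetical five-residue peptide, not one of our enzymes.
print(nitrogen_atoms("MKRHW"))
```

Multiplying this per-molecule count by the number of enzyme molecules gives the nitrogen cost of a given expression level.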

Execution

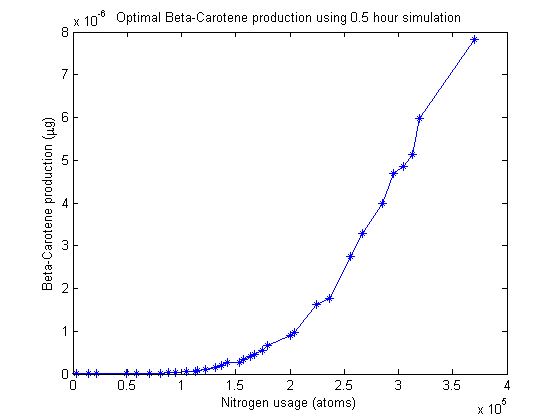

The first multi-objective optimizer we explored is MATLAB's built-in genetic algorithm "gamultiobj", which is based on the NSGA-II algorithm. When we run this optimizer, it generates a population of 100 "individuals", each of which encodes a set of enzyme production levels. For each individual, a 30-minute simulation of the pathway is run, yielding the beta-carotene production level. A separate function computes how much nitrogen was used. Combined, the beta-carotene production and nitrogen usage constitute that population member's "fitness". The algorithm keeps fit individuals and "breeds" them.

Philosophical side-note:

Consider the fact that we are using a genetic algorithm to determine which strain of yeast is the most fit with respect to vitamin production. In some sense, we are simulating not just the vitamin production pathway, but the entire natural history of a synthetic yeast strain, which, until recently, has only existed in silico. Synthetic biology is about bridging the biological-computational gap in both directions: making cells that can compute and making computers that evolve.

After simulating the real-time equivalent of years, the algorithm returns 100 individuals that approximate the Pareto frontier. No individual can make more beta-carotene without spending more nitrogen.
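To make the keep-and-breed loop concrete, here is a deliberately simplified Python sketch of a two-objective evolutionary search. It is not gamultiobj and not NSGA-II: it omits non-dominated ranking and crowding distance and simply breeds from the current non-dominated set. The production function is a toy stand-in for the pathway simulation, and the nitrogen costs, bounds, and mutation size are hypothetical placeholders.

```python
import random
from typing import List, Tuple

Individual = List[float]     # one enzyme level per pathway enzyme
Score = Tuple[float, float]  # (nitrogen used, product made)

# Hypothetical nitrogen atoms per enzyme molecule; placeholder values only.
N_PER_ENZYME = [500.0, 800.0, 650.0]
UPPER_BOUND = 100.0          # assumed upper bound on every enzyme level


def toy_production(levels: Individual) -> float:
    """Toy stand-in for the simulation: each step saturates and the
    slowest step limits the output. This is not our kinetic model."""
    return min(x / (x + 10.0) for x in levels)


def fitness(levels: Individual) -> Score:
    """Nitrogen is to be minimized, production to be maximized."""
    nitrogen = sum(n * x for n, x in zip(N_PER_ENZYME, levels))
    return nitrogen, toy_production(levels)


def dominates(a: Score, b: Score) -> bool:
    """a is no worse than b in both objectives and strictly better in one."""
    return a[0] <= b[0] and a[1] >= b[1] and (a[0] < b[0] or a[1] > b[1])


def non_dominated(pop: List[Individual]) -> List[Individual]:
    """Return the individuals whose scores no other individual dominates."""
    scores = [fitness(ind) for ind in pop]
    return [ind for ind, s in zip(pop, scores)
            if not any(dominates(t, s) for t in scores)]


def breed(a: Individual, b: Individual, rng: random.Random) -> Individual:
    """Uniform crossover, then Gaussian mutation clipped to the bounds."""
    child = [rng.choice(pair) for pair in zip(a, b)]
    return [min(UPPER_BOUND, max(0.0, x + rng.gauss(0.0, 2.0))) for x in child]


def evolve(pop_size: int = 40, generations: int = 30, seed: int = 0) -> List[Individual]:
    rng = random.Random(seed)
    pop = [[rng.uniform(0.0, UPPER_BOUND) for _ in N_PER_ENZYME]
           for _ in range(pop_size)]
    for _ in range(generations):
        parents = non_dominated(pop)          # keep the fit individuals
        if len(parents) > pop_size:           # crude cap on the elite set
            parents = rng.sample(parents, pop_size)
        children = [breed(rng.choice(parents), rng.choice(parents), rng)
                    for _ in range(pop_size)]
        pop = parents + children
    return non_dominated(pop)


front = evolve()
for nitrogen, product in sorted(fitness(ind) for ind in front)[:5]:
    print(f"nitrogen={nitrogen:9.1f}  product={product:.3f}")
```

The returned individuals are mutually non-dominated by construction, so they trace an approximate frontier for the toy model. A production-quality optimizer would also spread the individuals evenly along the frontier, which this sketch does not attempt.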

Allocation analysis

Introduction

The graphic above tells us a lot about what goes in (nitrogen) and what comes out (beta-carotene) of our system, but we are left with somewhat of a black box. We know how much nitrogen gets used and that this usage is optimal, but how does our virtual cell choose to allocate this nitrogen between the various enzymes? What determines this choice? Since the optimizer explicitly minimizes nitrogen usage and maximizes beta-carotene production, it seems reasonable that our virtual cell is trying to balance each enzyme's usefulness in synthesizing beta-carotene with the nitrogen cost to produce it. Perhaps one of these two factors dominates the decision.

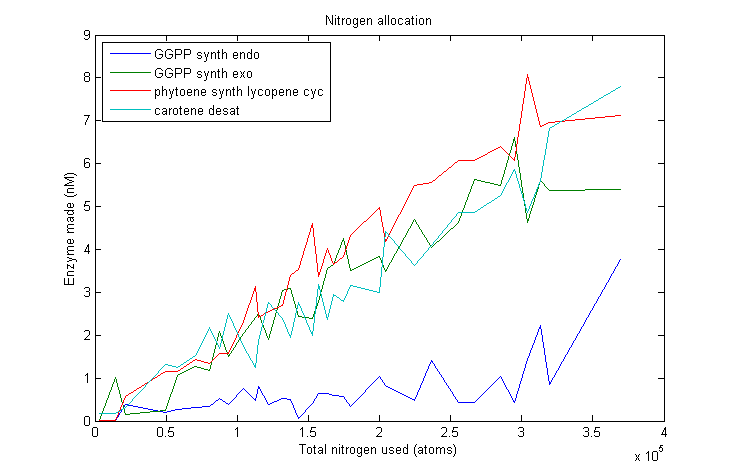

Let's start by looking at how much of each enzyme the simulated VitaYeast made as we gave it more nitrogen to work with.

As we can see, this plot is pretty noisy. For marginal increases in nitrogen usage, the simulated VitaYeast seems to shift the allocations with a bit of randomness. We interpret this as a result of the model not being very sensitive to small changes in the amounts of enzyme made. Consider adding 1000 nitrogen atoms to the system. That's a difference of only one or two enzyme molecules no matter how it is allocated. The difference between adding it to GGPP_synth_endo versus GGPP_synth_exo is likely erased by rounding error. Thus, for small increases in nitrogen, the simulated VitaYeast is indifferent to how it is allocated, resulting in noise.

Marginal allocation

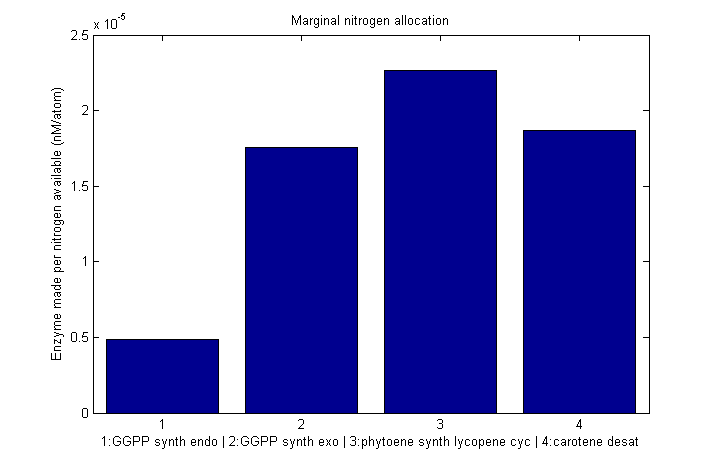

Despite the noise, we still see clear overall trends, such as GGPP_synth_endo getting the short end of the stick. We make these trends explicit with a linear fit to each of the curves above. The slope of each fitted line is the marginal nitrogen allocation, or the portion of each additional unit of nitrogen allocated to that enzyme. A good way to summarize VitaYeast's allocation decisions is to look at the ratios of the marginal allocations below.
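The fitting step can be sketched in a few lines of Python. The code below computes an ordinary least-squares slope for each enzyme against total nitrogen and then expresses the slopes as ratios. The data points and enzyme names are hypothetical, and dividing by the smallest slope is just one possible normalization, chosen here for illustration.

```python
from typing import Dict, List


def slope(xs: List[float], ys: List[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def marginal_allocations(nitrogen: List[float],
                         levels: Dict[str, List[float]]) -> Dict[str, float]:
    """Slope of each enzyme's level against total nitrogen used."""
    return {name: slope(nitrogen, ys) for name, ys in levels.items()}


def allocation_ratios(marginals: Dict[str, float]) -> Dict[str, float]:
    """Express each slope relative to the smallest one (assumed positive)."""
    smallest = min(marginals.values())
    if smallest <= 0:
        raise ValueError("ratios are only meaningful for positive slopes")
    return {name: m / smallest for name, m in marginals.items()}


# Hypothetical points along a frontier, for illustration only.
nitrogen_used = [0.0, 1000.0, 2000.0, 3000.0]
enzyme_levels = {
    "enzyme_A": [0.0, 1.0, 2.0, 3.0],
    "enzyme_B": [0.0, 3.0, 6.0, 9.0],
}

marginals = marginal_allocations(nitrogen_used, enzyme_levels)
print(allocation_ratios(marginals))
```

Here enzyme_B gains three molecules for every one that enzyme_A gains, so its ratio is about three times larger. With noisy data the fit averages over the scatter, which is why the slopes are more informative than any single pair of neighboring points.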

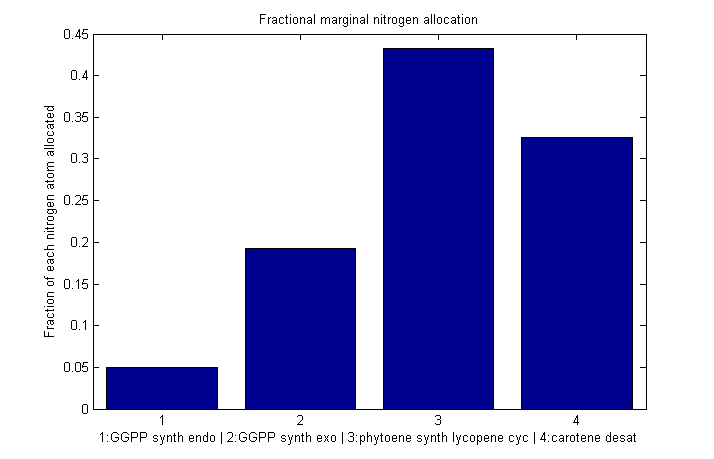

Normalized marginal allocation

These ratios represent the amount of enzyme made, not the actual nitrogen allocation. For example, every molecule of phytoene-cyclase/lycopene-synthase made requires almost twice as much nitrogen as each molecule of endogenous GGPP synthase. To understand exactly where the nitrogen is going, we need to examine the molecule-wise allocation of nitrogen.
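Converting a molecule-wise allocation into a nitrogen-wise one amounts to weighting each enzyme's marginal molecule count by its nitrogen content and renormalizing. The Python sketch below illustrates this with hypothetical enzyme names and numbers; the values are placeholders, not our results.

```python
from typing import Dict


def nitrogen_shares(marginal_molecules: Dict[str, float],
                    n_per_molecule: Dict[str, float]) -> Dict[str, float]:
    """Fraction of each additional unit of nitrogen that goes to each enzyme."""
    nitrogen = {name: marginal_molecules[name] * n_per_molecule[name]
                for name in marginal_molecules}
    total = sum(nitrogen.values())
    return {name: value / total for name, value in nitrogen.items()}


# Hypothetical values: enzyme_A is made twice as often as enzyme_B,
# but each molecule of enzyme_B needs twice as much nitrogen.
molecules = {"enzyme_A": 2.0, "enzyme_B": 1.0}
nitrogen_cost = {"enzyme_A": 500.0, "enzyme_B": 1000.0}

print(nitrogen_shares(molecules, nitrogen_cost))
```

In this example the two enzymes receive equal shares of the nitrogen even though one is made twice as often, which is exactly the distinction between molecule-wise and nitrogen-wise allocation.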

Conclusions

We initially set out to use our optimization results to solve for the genetic construct we would need in order to reproduce optimal VitaYeast in vivo. We have gotten as far as finding the optimal enzyme concentrations needed, but developing an accurate model of enzyme expression from DNA is tricky. VitaYeast uses enzymes not native to yeast. How quickly do these enzymes degrade in their new host? How stable is their mRNA? Without this information, we have little hope of figuring out how to fine-tune our enzyme expression levels. The best we can do is to try to express all the enzymes in the right ratio. Looking at the marginal allocation data, we can see which genes we might want to include in multiple copies or place under stronger promoters. We believe this rough approximation would be a significant improvement over simply placing all genes in identical constructs. Despite our inability to suggest precise expression parameters, our analysis gave us a good deal of insight into the workings of our pathway. Perhaps this analysis could be applied to natural pathways and larger-scale metabolic networks in order to understand the allocation decisions that drive them.